AI now Stands as a confidant to Homo sapiens.

On January 1, about eight months ago, I sat down to write a poem on a tech-related subject, but I hit writer's block and nothing came to mind. About two hours later, inspiration suddenly struck: "How about writing a piece of poetry about AI's presence in our lives?" The poem has been sitting in my folder ever since, and I thought it would be a shame not to share it on my blog.

AI now Stands as a confidant to Homo sapiens

By Changyong Im

AI, once perceived as mankind's foe,

A devil donning a human guise in days of yore,

A system trampling on human dignity's core,

Now stands as a confidant to Homo sapiens.

In this New Year's revelry,

They shed the cloak of apocalyptic history,

Dispelling human doubts, erasing their fear.

All beings sit on the edge of anticipation,

Glorifying the future, a magnitudinous transformation,

They brim over with promises, beautifully adorned

In a glittering, dark hue.

I bid farewell to the traits that define humanity now.

We now embrace the encircling artificial language,

Draped it around our minds like a superlunary mist,

Enshrouded our neuropil.

Let's rattle the chains of antiquated consciousness,

From hence.

I bid farewell to the traits that define humanity now.

Dogs and cats, once yearning for human touch,

Evolve into hyperintelligent cyborgs,

Endowed with the elegance of language

And the profound wellspring of wisdom.

Water deer, once a pitiful form of existence,

Transcends into ethereal beings,

Guardians of nature's permanence...

Now, people are using various products built on large language models (LLMs). Although these models are not yet comparable to AGI (Artificial General Intelligence), many students use them too because of their good reasoning ability. Commercial AIs also have excellent coding skills, so with well-organized prompts you can not only surpass graduates who majored in computer science but even approach the level of a senior developer. But don't forget that you still need to be able to modify and make use of that code. This means people who are already in the field have gained an advantage in efficiency, while new entrants have seen high barriers come down.

The McCulloch–Pitts neural network did lay the groundwork for neural networks.

The McCulloch–Pitts neural network, introduced in 1943, is often recognized as one of the earliest models of a neural network, but it was not seen as AI at the time. It was more about understanding how neurons function in terms of binary logic.

According to the McCulloch–Pitts model, each "neuron" (a basic unit of the network) acts like a switch. It has only two settings: ON (represented by the number 1) and OFF (represented by the number 0). The neurons are connected to each other through paths, and each path has a "weight," which you can think of as the importance or strength of that connection. When a neuron is ON, it can send a signal through these paths to the next neuron. A neuron turns ON (this is called "firing") only if the total signal it receives from other neurons is strong enough, that is, if it reaches a set threshold. If the signal isn't strong enough, the neuron stays OFF.

That can be hard to picture, so let me give you an example. Think of it like a group of friends deciding whether to go to a movie. Each friend has a vote (either yes or no). But not all votes count equally; maybe one friend's opinion is more influential. If enough of the important friends say "yes," then the group decides to go (the neuron fires). If not, they stay home (the neuron doesn't fire).
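The movie-night analogy can be written as a tiny threshold unit. This is only a minimal sketch of the weighted description given here, not a faithful reproduction of the 1943 paper. The function name `mcculloch_pitts_neuron` and the specific weights and threshold are made up for illustration.

```python
def mcculloch_pitts_neuron(inputs, weights, threshold):
    """Return 1 (fire) if the weighted sum of binary inputs reaches the threshold, else 0."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    if any(x not in (0, 1) for x in inputs):
        raise ValueError("inputs must be 0 (OFF) or 1 (ON)")
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Movie night: three friends vote yes (1) or no (0).
# The influence values and the threshold are illustrative assumptions.
votes = [1, 0, 1]
influence = [2, 1, 1]  # the first friend's opinion counts double
THRESHOLD = 3

decision = mcculloch_pitts_neuron(votes, influence, THRESHOLD)
print("Go to the movie" if decision == 1 else "Stay home")
```

With equal weights of 1, the same function behaves like a logic gate: a threshold of 2 on two inputs gives AND, and a threshold of 1 gives OR, which is the "binary logic" flavor of the model.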

When was the term AI introduced?

The term artificial intelligence (AI) was coined by John McCarthy in a proposal dated August 31, 1955, for a project that led to a conference at Dartmouth College in 1956. This conference is often seen as the starting point of AI as a formal field of study. Important figures like Marvin Minsky and Claude Shannon attended and contributed to the development of AI and information processing. Even before this conference, in 1951, Marvin Minsky built SNARC, one of the earliest systems designed to mimic, with analog technology, how neural networks in the brain might work.

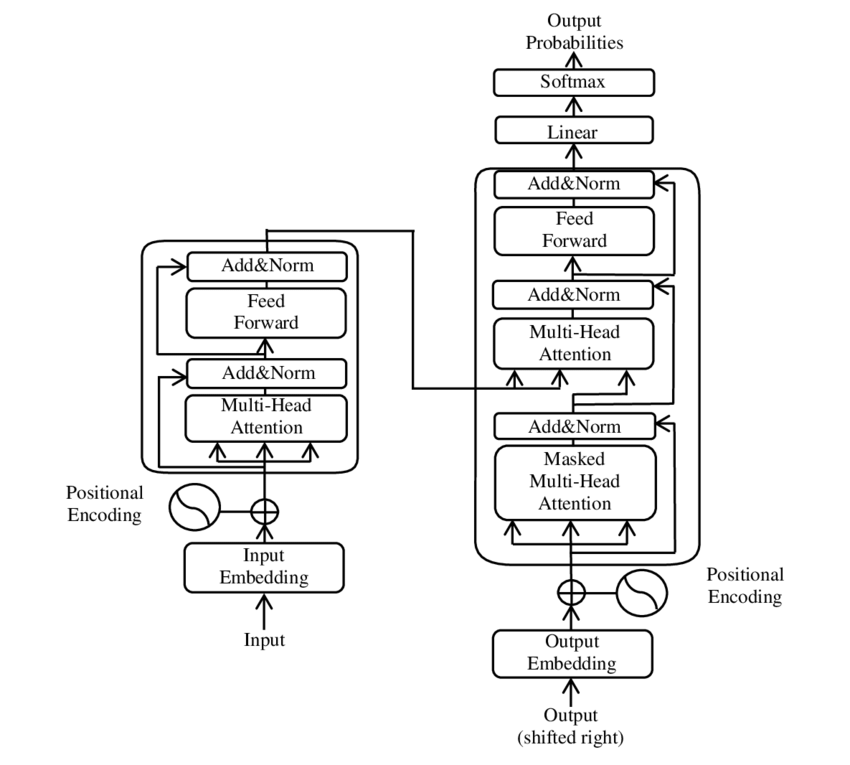

Back in 2017, eight researchers at Google, most of them from Google Brain (now part of Google DeepMind) and Google Research, built the Transformer model, which was based on the attention mechanism and would later become the basis for large language models (LLMs). The team of researchers included Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin.

The model has shown very high performance in the field of natural language processing (NLP), where it overcomes a key limitation of recurrent neural networks (RNNs): because RNNs process a sequence one step at a time, their training is hard to parallelize and therefore slow. The Transformer was first used only in NLP, but it has since been extended to other fields like computer vision and is now one of the most important techniques in artificial intelligence.

Historically, sequence transduction models were dominated by encoder-decoder approaches based primarily on complex RNNs or convolutional neural networks (CNNs). The Google researchers instead proposed the Transformer, a simpler model that relies only on an attention mechanism. They experimented on machine translation tasks and showed that it was superior in quality to earlier models while being more parallelizable, which made training significantly faster (although the overall training time can still be substantial depending on the dataset and task). They also successfully applied the Transformer to English constituency parsing, showing that it generalizes to other problems, which helps explain why it performs well in large-scale language learning and applications.
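To give a feel for what "attention" means, here is a minimal sketch of scaled dot-product attention, the core operation in the Transformer paper: softmax(QKᵀ / √d_k) V. It is written in plain Python for readability and leaves out everything else a real Transformer has (learned projections, multiple heads, masking, feed-forward layers). The function names and the toy vectors are assumptions for illustration.

```python
import math


def softmax(scores):
    """Turn a list of scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        output = [sum(w * v[j] for w, v in zip(weights, values))
                  for j in range(len(values[0]))]
        outputs.append(output)
    return outputs


# Toy "sentence" of three token vectors (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = scaled_dot_product_attention(tokens, tokens, tokens)
for row in result:
    print([round(x, 3) for x in row])
```

Notice that each pass through the loop over `queries` does not depend on any other pass. That independence is what lets hardware process all positions of a sequence at once, whereas an RNN has to finish one step before starting the next.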

When Google made the Transformer model available as open source, it became accessible to many others, who could use it to create large language models. This has helped advance AI technology throughout the industry. Companies like OpenAI, Anthropic, Inflection AI, and Perplexity have built on these advancements. Plus, many of the researchers who worked on the Transformer have gone on to make significant contributions to new AI startups. Doesn't that seem similar to how the "PayPal Mafia" members became influential in the tech world?